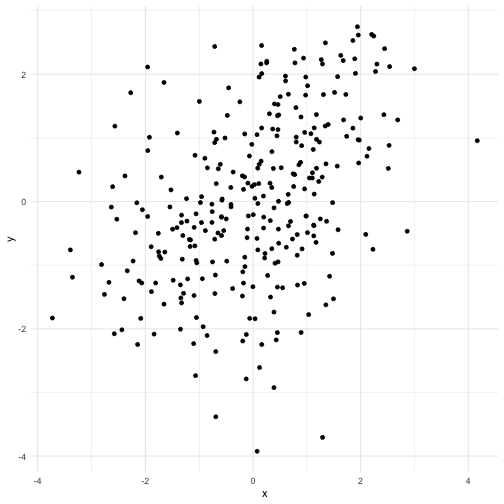

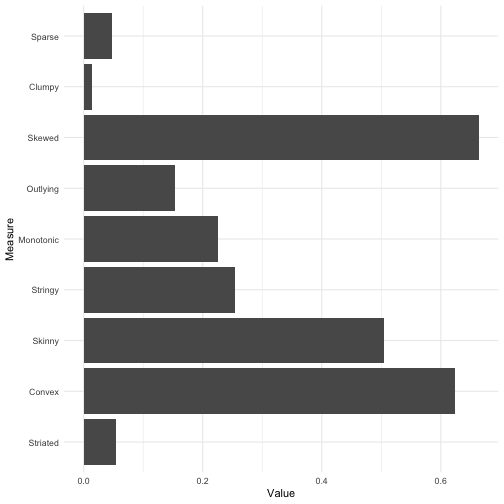

# The classic wolf-husky classifier

.center[<img src="imgs/husky_wolf_sbs.jpg" style="width: 60%">]

.center[Is it a husky or a wolf?<br><br>]

.center[<img src="https://c.tenor.com/5v0B7L4LZzMAAAAM/confused-huskies.gif">]

---
class: center, middle

### Let's take a look at what the model is actually looking at by using

--

## <span style="color:rgb(213,94,0)">**I**</span>nterpretable <span style="color:rgb(213,94,0)">**M**</span>achine <span style="color:rgb(213,94,0)">**L**</span>earning methods

---
class: center, middle

# It's actually a snow detector 🤯

<img src="imgs/wolf_husky_lime.png" style="width:80%">

.footnote[<small> Source: [Explaining Black-Box Machine Learning Predictions - Sameer Singh, Assistant Professor of Computer Science, UC Irvine](https://papers.nips.cc/paper/2019/hash/bb836c01cdc9120a9c984c525e4b1a4a-Abstract.html)</small>]

---
class: center,middle

# But who will watch over the watchers?

<img src="imgs/xai_dog_fail.png" style="width:50%">

.footnote[<small> Source: [_Explanations can be manipulated and geometry is to blame_ A.K. Dombrowski NeurIPS 2019](https://papers.nips.cc/paper/2019/hash/bb836c01cdc9120a9c984c525e4b1a4a-Abstract.html)</small>]

---
class: middle, center

### We are reluctant to use IML methods
### because of these drawbacks

<br>
<hr style="width:50%">
<br>

--

### But what would happen
### if we didn't keep on using them?

<br>
<hr style="width:40%">
<br>

---

### The Apple Card algorithm fiasco

<!-- .pull-left[<img src="imgs/apple_card_bias_2.png" style="width:100%">] -->
<!-- .pull-right[<img src="imgs/apple_card_bias_1.png" style="width:100%">] -->

.center[
<!-- <br><br><br> -->
<img src="imgs/apple_card_bias.png" style="width:100%">
]

--

### And more issues...
.pull-left[
.center[
<img src="imgs/COMPAS_bias_updated.png" style="width:90%">
]
].pull-right[
.center[
<img src="imgs/face_detect_bias.png" style="width:100%">
]
]

---
class: center, middle

### What if we explored the
### explainability power of IML methods
### to understand them better?

---
class: center, middle, first-slide, inverse

<span style="font-family: 'Playfair Display', serif; font-weight: 500; font-size: 36pt"> Transparency, auditability, and explainability of interpretable machine learning models </span>

<hr style="width:60%;margin: 0 auto;"/>

.pull-left[
<span style="font-family: 'Lato', sans-serif;font-size: 24pt;font-weight:400;"> Janith Wanniarachchi <br/> <span style="font-weight:400;font-size: 18pt;">BSc. Statistics (Hons.)</span><br> <span style="font-weight:300;font-size: 17pt;">University of Sri Jayewardenepura</span> </span>
]

.pull-right[
<span style="font-family: 'Lato', sans-serif;font-size: 24pt;font-weight:400;"> Dr. Thiyanga Talagala <br/> <span style="font-weight:400;font-size: 18pt;">Supervisor</span><br> <span style="font-weight:300;font-size: 17pt;">PhD, Monash University, Australia</span><br> </span>
]

---
class: center, middle

# How are we going to do this?

We are going to explore the land of IML methods and test out their explainability powers.

<img src="https://c.tenor.com/VjTYO49uzqwAAAAC/hobbit-bilbo.gif" style="width:120%">

---
class: center,middle

.center[
## But wait,
### If we are going to test out IML methods
### Then we need a way to quantify the structure of the data
]

--

.center[
### And then
## We need a way to generate data by quantifying the structure of the data
]

---

## Can you interpret the relationships?

.center[
<img src="imgs/scag_splom_2var.png" style="width:20%">
]

---

## How about now?

.center[
<img src="imgs/scag_splom_5var.png" style="width:40%">
]

---

### What if we could look at a scatterplot in a different way?

.center[
<img src="imgs/scag_splom_full.png" style="width:70%">
]

---

### The answer?
Scagnostics!

.pull-left[
<img src="imgs/leland_prof_pic.png" style="width:120%" />

The late Leland Wilkinson developed graph-theory-based scagnostics, which quantify the features of a scatterplot as measures ranging from 0 to 1.
]

<!-- .pull-left[From this:<img src="imgs/scag_splom.png">] -->
<!-- .pull-right[To this:<img src="imgs/scag_scag_splom.png">] -->

.pull-right[<img src="imgs/scag_measures_concat.jpg" style="width:100%">]

---
class: middle

.pull-left[
### The first scatterplot in the matrix
<!-- -->
]

.pull-right[
### The scagnostics
<!-- -->
]

---
class: center, middle

## How do we generate data from this?

--

### We need to reverse this!

--

Earlier, given `\(N\)` coordinate pairs `\((X,Y)\)`, we got a `\(9 \times 1\)` vector of scagnostic values

--

Now, given a `\(9\times 1\)` vector of scagnostic values, we need to get back `\(N\)` data points `\((X,Y)\)`!

--

## But the equations aren't one-to-one functions!

---
class: center, middle, first-slide

<img src="imgs/ice_cream_sprinkles.jpg" style="position:absolute;top:0px;left:0px;object-fit:fill;scale: 140%;object-position: 0px 90px;">

---

# Inspiration can come at the hungriest moments

The idea actually came to me while having dessert

.center[<img src="https://c.tenor.com/KnbkykkOK04AAAAC/ice-cream-sprinkle.gif" style="width:40%">]

> Why don't I first sprinkle a few data points on a 2D plot,
> making sure that they land in the right places,
> and then, on top of those sprinkles (data points),
> keep on adding more sprinkles
> so that the final set of sprinkles (data points) looks good!

---

# Simulated Annealing

.pull-left[
<img src="http://www.turingfinance.com/wp-content/uploads/2015/05/Annealing-700x536.jpg" />

The name of the algorithm comes from annealing in materials science, a technique involving heating and controlled cooling of a material to alter its physical properties.
]

.pull-right[
The algorithm works by setting an initial temperature and gradually decreasing it towards zero. As the temperature decreases, the algorithm becomes greedier in selecting the optimal solution. At each time step, the algorithm proposes a solution close to the current one and accepts it with a probability that depends on the quality of the new solution and on the current temperature.

<img src="https://upload.wikimedia.org/wikipedia/commons/d/d5/Hill_Climbing_with_Simulated_Annealing.gif" />
]

---

# The algorithm

.center[<img src="imgs/scatteR_flowchart.png" style="width:60%">]

---
class: center, middle

# Introducing

<img src="imgs/scatteR.png" style="width:40%"/>

.footnote[<small>Install and try it out for yourself from https://github.com/janithwanni/scatteR</small>]

---

## Data Generating Method ✅

.pull-left[
```r
library(scatteR)
library(tidyverse)

# generate 200 points targeting a Monotonic value of 0.9
df <- scatteR(measurements = c("Monotonic" = 0.9),n_points = 200,error_var = 9)
qplot(data=df,x=x,y=y)
```
]

.pull-right[
<img src="imgs/scatter_example_plot_1.png" />
]

.pull-left[
```r
scatteR(c("Convex" = 0.9),n_points = 250,verbose=FALSE) %>% # data generation
  mutate(label = ifelse(y > x,"Upper","Lower")) %>% # data preprocessing
  ggplot(aes(x = x,y = y,color=label))+
  geom_point()+
  theme_minimal()+
  theme(legend.position = "bottom")
```
]

.pull-right[
<img src="imgs/scatter_example_plot_2.png" />
]

<!-- <img src="imgs/scatteR_samples.png" style="width:95%"> -->

---

# IML methods come in different flavors

.pull-left[
### Global interpretability methods

Give an overall bird's-eye view of the entire model

* Partial Dependence Plots
* Individual Conditional Expectation plots
* Accumulated Local Effects plots
]

.pull-right[
### Local interpretability methods

Explain the reasoning behind a single instance

* LIME
* SHAP
]

.center[
<img src="imgs/global_v_local.png">
]

---
class: center, middle

### Data Generating Method ✅
### Background idea on IML Methods ✅

<hr
style="width:50%">
<br><br>

# Let's dive in!

---
class: middle

### The experimental design

.center[
<img src="imgs/DOE.png" style="width:80%">
]

---

# PDP Curves

The partial dependence plot (PDP or PD plot for short) shows the marginal effect that one or two features have on the predicted outcome of a machine learning model. A partial dependence plot can show whether the relationship between the target and a feature is linear, monotonic, or more complex.

<img src="imgs/iml_pdp.png">

---

# Let the two middle men talk

.pull-left[
.center[
<img src="imgs/middle_men_meme.jpg" style="width:70%">
]

The surrogate model based on the black box model says: <span style="color:rgb(86,180,233)">*"This is the function I think the model is approximating"*</span>

The surrogate model based on the PDP curve says: <span style="color:rgb(0,158,115)">*"This is the function I think the PDP curve is explaining"*</span>
]

.pull-right[
.center[
We build PDP curves on top of the model, one for each of the two classes: `\(\text{Pr}(\hat{Y} = 1)\)`, `\(\text{Pr}(\hat{Y} = 0)\)`

We can build a surrogate logistic regression model that gives `\(\ln\Big(\frac{\text{Pr}(\hat{Y} = 1)}{\text{Pr}(\hat{Y} = 0)}\Big) = \boldsymbol{\beta}X\)`

What if we divided one PDP curve by the other, took the logarithm of the ratio, and performed linear regression on it?

After all, logistic regression is simply linear regression on the log-odds, right?
]]

---

# Automatic IML method evaluation using Surrogate models and PDP curves

<img src="imgs/surrogate_model_results.png">

---
class: center, middle

.center[
## Now onto local interpretability methods
]

---

.pull-left[
### LIME

A good explanation should satisfy two criteria

* Interpretability
* Local fidelity

<img src="imgs/lime_intro.png" />
]

.pull-right[
### SHAP

SHAP is based on game-theoretic Shapley values. The Shapley value is the average marginal contribution of a feature value across all possible combinations of features.
The idea is to distribute the difference between the prediction and the average prediction among the features.

<img src="imgs/shap_intro.png" />
]

---

# Good Old MNIST

<img src="imgs/mnist_median_labels.png">

---

## Random Forest vs Convolutional Neural Network

### Which to choose?

Both models performed really well, yet one incorporated spatial information while the other did not

.pull-left[<img src="imgs/rf_conf_mat.png">]
.pull-right[<img src="imgs/cnn_conf_mat.png">]

---

### SHAP explanations from both parties

.center[
Random Forest <img src="imgs/mnist_shap_rf.png">

Convolutional Neural Network <img src="imgs/mnist_shap_cnn.png">
]

---

### LIME explanations from both parties

.center[
Random Forest <img src="imgs/mnist_lime_rf.png">

Convolutional Neural Network <img src="imgs/mnist_lime_cnn.png">
]

---

# But what about the real world?

.center[<img src="imgs/xray_image_samples.png" style="width:60%"/>]

* We selected a dataset of 100 X-ray images of body parts with 5 classes.
* The images were resized to fit our memory constraints.
* Given our computing budget, a pretrained MobileNetV3 model was fine-tuned on the X-ray images.
* The model and a sample of images were used to calculate LIME and SHAP values.
* The results gave us a lot of insights for extending our research further.

---
class: center, middle

# We made it back home!

--

## What did we learn from our journey together?

---

.pull-left[
### LIME limitations

* Perturbation-based explanations are unreliable when the model is misspecified
* If there are correlated features, the explanations might not be sensible
* Needs GPU support
]

.pull-right[
### SHAP limitations

* Doesn't consider groupings of features in the way LIME does
* Exact values are hard to calculate, and approximations require a lot of computing power
* Needs GPU support
]

### Overall conclusions

* IML methods should not be used simply because the model performed well.
* Pay attention to the structure of your data and the specification of your model; that is where everything lies.
* Be careful around IML methods that use perturbation underneath, and inspect some of those perturbations before you send them out.
* Look at the overall interpretation given by your IML method per class and see whether they match up.
* Also take a look at how certain the model is of its predictions; if the model is not sure, the IML method won’t be of any use.
* It’s still a growing field, and with good advancements in the right directions, perhaps we can make ML models not so alien to us in the future!

---
class: center, middle

<br/>

# Thank you for listening!

<br/>

### A huge thank you to my supervisor

<img src="https://pbs.twimg.com/profile_images/1459464211713691652/SqkWoTBr_400x400.jpg" style="border-radius:200px;width:20%">

Dr. Thiyanga Talagala

Check out her work on GitHub [@thiyangt](https://github.com/thiyangt/)

<br>

---
class: center, middle

<h2 style="color:#5b85aa">Have any follow up questions?</h2>

Email: [janithcwanni@gmail.com](#)

Twitter: [@janithcwanni](https://twitter.com/janithcwanni)

GitHub: [@janithwanni](https://github.com/janithwanni)

LinkedIn: [Janith Wanniarachchi](https://www.linkedin.com/in/janith-wanniarachchi-462851117/)

<hr style="width:40%">

Try scatteR at [https://github.com/janithwanni/scatteR](https://github.com/janithwanni/scatteR)

---
class: center, middle

# Glad you asked

---

# How does scagnostics work?

<img src="imgs/scag_pieces.png">

---

<img src="imgs/scag_orig_paper.png">

---

# Acknowledgements

The following creators are credited for the images and content referenced in these slides.
* Image of Ice cream sprinkles (Slide #17): Photo by <a href="https://unsplash.com/@calavera?utm_source=unsplash&utm_medium=referral&utm_content=creditCopyText">David Calavera</a> on <a href="https://unsplash.com/@calavera?utm_source=unsplash&utm_medium=referral&utm_content=creditCopyText">Unsplash</a>
* Image of Annealing (Slide #19): [http://www.turingfinance.com/simulated-annealing-for-portfolio-optimization/](http://www.turingfinance.com/simulated-annealing-for-portfolio-optimization/)
* Global and Local interpretations (Slide #23): [https://www.kdnuggets.com/2018/06/human-interpretable-machine-learning-need-importance-model-interpretation.html](https://www.kdnuggets.com/2018/06/human-interpretable-machine-learning-need-importance-model-interpretation.html)
* PDP curves (Slide #26): [https://christophm.github.io/interpretable-ml-book/pdp.html](https://christophm.github.io/interpretable-ml-book/pdp.html)
* SHAP diagram (Slide #30): [https://github.com/slundberg/shap](https://github.com/slundberg/shap)
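
---

# Backup: simulated annealing, sketched in R

The "sprinkles" idea and the temperature schedule from the Simulated Annealing slide can be sketched in a few lines of base R. This is a minimal sketch under stated assumptions and not scatteR's actual implementation: `score()` and `anneal_points()` are hypothetical helpers, the squared Spearman correlation stands in for a single scagnostic measure, and the starting temperature, cooling rate, and jitter size are arbitrary choices.

```r
set.seed(2022)

# Hypothetical stand-in for one scagnostic measure:
# the squared Spearman correlation, which lies in [0, 1].
score <- function(pts) cor(pts$x, pts$y, method = "spearman")^2

# Hypothetical helper (not part of scatteR): move one point at a time and
# accept worse candidates with a probability that shrinks as we cool down.
anneal_points <- function(target, n_points = 50, n_iter = 3000,
                          temp = 0.05, cooling = 0.995) {
  pts <- data.frame(x = runif(n_points), y = runif(n_points))
  loss <- abs(score(pts) - target)
  initial_loss <- loss
  best <- pts
  best_loss <- loss
  for (i in seq_len(n_iter)) {
    # Propose a neighbour: jitter one randomly chosen point, clamped to [0, 1].
    cand <- pts
    j <- sample.int(n_points, 1)
    cand$x[j] <- min(max(cand$x[j] + rnorm(1, sd = 0.1), 0), 1)
    cand$y[j] <- min(max(cand$y[j] + rnorm(1, sd = 0.1), 0), 1)
    cand_loss <- abs(score(cand) - target)
    # Metropolis rule: improvements always pass (exp(.) >= 1),
    # worse moves pass with probability exp(-increase / temp).
    if (runif(1) < exp((loss - cand_loss) / temp)) {
      pts <- cand
      loss <- cand_loss
    }
    # Remember the best configuration seen so far.
    if (loss < best_loss) {
      best <- pts
      best_loss <- loss
    }
    temp <- temp * cooling  # cool down, so the search becomes greedier
  }
  list(points = best, loss = best_loss, initial_loss = initial_loss)
}

res <- anneal_points(target = 0.9)
```

Calling `plot(res$points)` draws the resulting scatterplot, and `res$loss` reports how far the best configuration ended up from the target. Tracking the best configuration separately means the returned loss can never be worse than that of the random starting points, even though individual steps are allowed to move uphill.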